The Weekly Anthropocene Interviews: Ozy Brennan

An effective altruist animal advocate, life advice writer, and philosopher.

Ozy Brennan is a writer, philosopher, community leader, and animal advocate long associated with the pioneering effective altruism movement. They write the popular Substack Thing of Things.

A lightly edited transcript of this exclusive interview follows. This writer’s questions and remarks are in bold, Ozy Brennan’s responses are in regular type. Bold italics are clarifications and extra information added after the interview.

Can you share a little bit of your personal take on what effective altruism is, and how you got into it? Because I think it's a really fascinating, deeply honorable, and incredibly morally important movement. I wrote an article about it. But I worry that for some of the public now, it might be primarily known as “Isn't that the weird philosophy that the criminal crypto billionaire really liked?” I think effective altruism has suffered some serious reputational damage based on inaccurate impressions, so I think it deserves to be reintroduced by a figure like yourself.

In the same way that “Feminism is the radical notion that women are people” is accurate, the definition of effective altruism is fundamentally “People who are trying to use evidence and reason to figure out the best possible ways to do good in the world.”

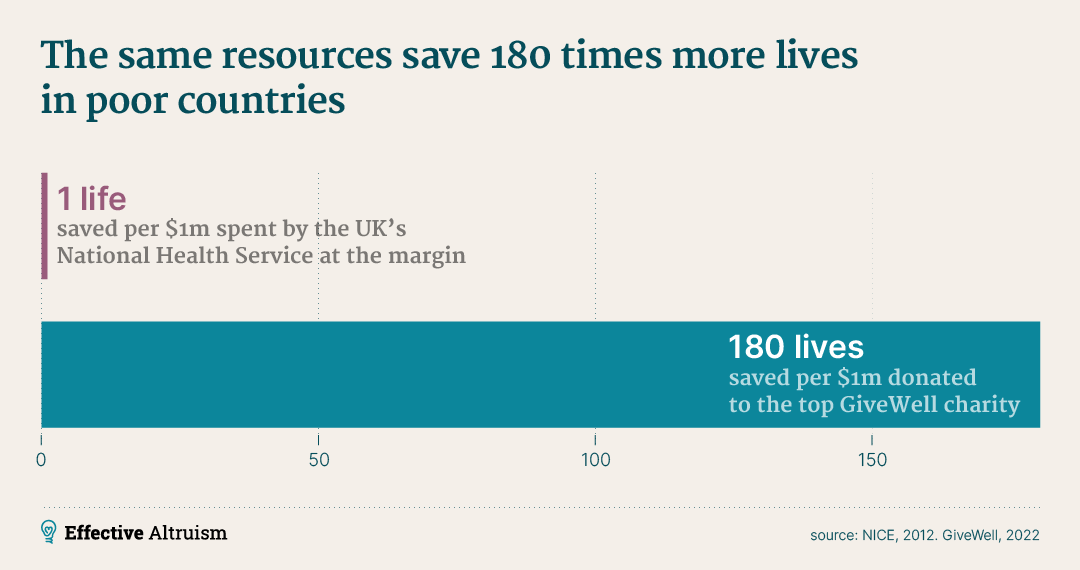

You can get very, very weird with effective altruism, and we can talk about that kind of thing later. But when I think about the very basic case for effective altruism, I think about a wonderful paper by Toby Ord called “The Moral Imperative toward Cost-Effectiveness in Global Health.” What it says is that if you look at the gap between the most effective and the least effective HIV charities, the difference is a factor of thousands.

The best charities are thousands of times better than the least effective charities. If you expand it to global health, the difference in cost-effectiveness is tens of thousands of times. If you expand it to all global health programs that have ever existed, it is literally infinite: smallpox eradication has saved more money than it cost, because we no longer have to treat smallpox, which makes it infinitely cost-effective.

For more background, here are some overviews of effective altruism from The Weekly Anthropocene and Thing of Things.

The Weekly Anthropocene: Effective Altruism

In this Deep Dive, I’d like to offer a “10,000-foot view” summary of effective altruism, often known simply as “EA”, a fascinating new socioeconomic movement. If you’ve previously read about effective altruism, this post will probably seem ludicrously oversimplified. If you haven’t heard of it before, or the name just rings a faint bell, know that this is an extremely brief introduction to a complex and multi-faceted school of thought.

There’s the sort of common-sense idea that you should not give your money to things that don't work. You shouldn't give your money to things that are actively counterproductive. You shouldn't give your money to some charity where the people are going to just steal the money and take it off to the Bahamas, right?

But there’s also this common intuition that most charities are more or less the same, and that’s not true. Say you compare treating Kaposi's sarcoma, which I think is the least effective intervention in Toby Ord's HIV paper, with distributing condoms, which was one of the most effective.[1] People think, oh, those are about the same. They're both good things to donate to, which they absolutely are. If you're donating to help people in the developing world treat their Kaposi's sarcoma, that is fantastic. But you can improve people's lives hundreds of times more by switching to distributing condoms.

And another thing that I think is important to point out is that it is not intuitively obvious which of these is more effective. You could imagine a different world where it's like, oh, yeah, the Kaposi’s sarcoma treatment is super cost-effective, condoms not so much. You have to actually go out into the world and check! Intuitively, these all seem nice, but when you check the cost-effectiveness, there’s a huge difference. It's just an enormous gap.

Yeah, so I absolutely agree with that. That's basically the version of effective altruism that I wrote about. Like, a little public health accountancy can create huge, massive gains in human well-being. That’s great!

But also, maybe just through proximity in the same Bay Area social groups, EA has become really associated with the ideologies that you wrote about as TESCREAL thought. As you pointed out in your great Asterisk article, that's perhaps an oversimplified grouping of disparate ideas, but they’re often seen together as a techno-messianic sort of viewpoint with very strong views about the ethics of longtermism and AI and stuff, views that I think are substantially more controversial than the idea that you should try to make sure your charity gets a good bang for its buck.

And I find this stuff intellectually fascinating! But cards on the table, my personal p(doom) is minuscule. I think that it's almost a technological version of religious eschatology that we've created here, fearing that AGI will unilaterally save or destroy the world very soon, and for a lot of reasons I doubt that. I personally care a lot more about malaria bed nets than AI safety. And it's not obvious to me why those things became so closely linked. So, can you discuss that wing of EA, and that bundle of concepts and philosophies known as TESCREALism?

The thing that the TESCREALists have in common is the idea that the future is going to be very weird and that’s going to be important. As a sociological fact, the reason that EA is closely tied to TESCREALism is that Eliezer Yudkowsky got interested in this effective charity thing that was coming out of Oxford and New York, and wound up promoting it a bunch on his blog, which was also about how AI is really important and how scared he is of it. But I think that concerns like this were going to come up regardless once you start taking “We should try to do the best thing with our money and resources” very seriously.

I am fundamentally kind of a normal person. I am somebody who wants to do normal things, and I'm suspicious of all this weird stuff. But what ends up happening is that when you start taking the question “What is the best thing to do with your money?” very seriously, it's very plausible that the answer is something that people have not really been thinking about, because most people have not been trying to answer that question very seriously at all. It would be kind of surprising, in a way, if it turned out that the best thing to do with your money was something we had already thought of and already think is a good idea. So it shouldn't be surprising if the answer is a strange thing that you feel sort of reluctant about. I noticed this through animal advocacy, where you start out being like, this factory farming thing seems pretty terrible, right? And then you're like, okay...chickens are more important to think about than cows, just from an animal welfare perspective. From a climate perspective, it's different. But if you're just looking at it from an animal welfare perspective, you know, many, many more individuals are at stake. You get less meat per chicken than per cow, so more chickens are affected for a given amount of meat. Then you're like, okay, so we should be particularly worried about chickens.

Incidentally, that argument is why I personally eat dairy products but not chicken meat.

Yeah. And then you move on a step and you're like, okay, but...fish? Often you have to raise many individual fish to get much edible meat; it depends on the species. But there are certain species of fish that are, you know, as small as chickens. So maybe we are very concerned about fish.

And then you can go even farther and you're like…shrimp? Shrimp are tiny. So truly gigantic numbers of shrimp are killed for food, potentially causing vast suffering. And then you start being worried about it. And then you're like, insects? You start thinking, okay, let us think about the capabilities of these animals, let's set aside our human-centric biases and let's think about just what are the capabilities of these animals and how many of them are we affecting? You start going, uh…bees? Are we worried about bees? And then you get into this sort of very weird ideology, without any sort of sociological context at all, by trying to set aside your pre-existing biases and trying to reason from these basic moral intuitions.

Relatedly, Ozy wrote a great Asterisk article on Martha Nussbaum's Justice for Animals.

And if you carry your basic moral intuitions through, they get strange. Once they've carried you into very strange places, at some point you're like, maybe I don't trust my reasoning that much. Maybe you're like, okay, I've done all this reasoning, and on my piece of paper, what I got is “We need to destroy the Amazon rainforest because of all the suffering of the ants there.” And then you are like, okay, that's the answer I got, but no, that doesn't seem right.

But on the other hand, if you are not having opinions that are even a little bit strange, I kind of wonder what we're doing here, right? If you sit down and go, okay, we're going to try to answer questions that nobody else has answered and that nobody else is working on, the answer is not going to be completely normal.

This is what that classic Scott Alexander post was about, right? Attempting to make the world better in principled ways might sometimes massively backfire. There’s that argument that Engels was the most successful effective altruist in history, just from a bang-for-your-buck perspective, because he successfully identified Marx as a guy writing a political philosophy that could change the course of history, and financially supported him to make it possible for him to do it. And a few decades later, whoops, gulags!

EA is this interesting mix that is a very vibrant intellectual space, but like you said, and you just explained it very well, following your moral intuitions can lead to strange places. I did this interview because I want to promote effective altruism, but there are some areas where I have questions. Some cause areas EA supports are very, very well verified and morally clear-cut, like saving kids from preventable diseases in sub-Saharan Africa.

And then there’s stuff like AI safety, which from a non-charitable perspective could be viewed as paying already-rich people in the Bay Area to do thought experiments about hypothetical machine gods.

I think that the critique that you're making of AI safety was a much more reasonable critique 10 years ago than it is right now, because a lot of what people are working on in the AI safety space right now is very much non-philosophical. There are things like trying to figure out how we would test currently existing generative AI, how we figure out what its capabilities even are, and how to understand the way current generative AI is thinking. There's also often a lot of policy lobbying, trying to get policymakers in national security and other areas to understand these issues and be able to make sensible policy about them. It seems not unreasonable to me to try to influence the policy of the U.S. government, the most powerful government in the world.

I agree. I work on energy and climate policy issues trying to influence the U.S. government! I guess what I'm saying is not so much that it isn't important, but it's the uncertainty. With, like, vitamin A supplementation in northern Nigeria, you will save kids from blindness, and it's pretty broadly agreed that it's saving kids from blindness.

But with any given AI intervention, the reason I'm not in that field or even writing about that field is because I don't even know which side is correct. Like, let's say you just try to limit development of AI. Are you saving the world from a Terminator apocalypse? Or are you preventing the discovery of amazing new life-saving drugs because of AI-modeled protein folding? I wouldn't even know which side to be on in any given AI policy question.

I think it should be debated, but it feels like a super, super uncertain space that ends up sharing the EA conceptual framework with much more directionally certain stuff about how you save people's lives.

Yeah. Effective charities aren’t absolutely certain, of course, but I can see the case that there is a different, more bounded sort of uncertainty in the GiveWell situation.

I feel like AI has a directional uncertainty. We might still be uncertain about exactly how much good a well-evaluated charity does, but I at least know the sign of that charity’s impact is positive in terms of reducing suffering. With any AI advocacy at all, it’s so uncertain that I feel like I personally wouldn’t know if I’m making the world better or worse.

This is something I struggle with a lot as an animal advocate. It’s called the meat-eater problem. Basically, you could make a reasonable case that the development of poor countries is net negative if you care about animals, because better-off people eat more meat, and that meat ends up coming from horrible factory farm conditions instead of the relatively okay conditions of, like, a pig in a village in Kenya. As countries develop, their demand for meat just expands enormously. And I think that most of the people I know who care about animals are just like, okay, you can't say “We need to trap sub-Saharan Africa in poverty because they're gonna eat meat.” That's a supervillain thing to do! This gets into a broader thing, which is that, especially when you get into animals, and especially in factory farming, there's often a really sharp tradeoff between the welfare of humans and the well-being of other species. And, you know, that's kind of what the entire field of environmentalism is about.

What are your thoughts on technological progress vis-a-vis animal suffering? Efficient factory farming has led to horrendous suffering, but I really hope that new developments like plant-based meats, microbial farming products like Solein, and cell-cultivated meat might soon greatly reduce suffering. I'm really, really hoping that factory farming will be, I don't know, a booster rocket stage we can eventually discard or something, that we can move through and past factory farming.

What are your thoughts on the best paths forward for win-win options that reduce animal suffering without compromising human well-being? Do you think that meat substitutes are the key way forward? Do you think there should be more effort focused on just making factory farming a little bit nicer on the margin, like with bigger cage sizes, or new things? There’s talk about AI analysis of pig grunts to identify when they’re distressed. There are a lot of possible pathways.

So in the long term, decades from now, the ideal is that every animal that is farmed has a good life. I am not a deontological vegan who says we should never farm animals and eat them; I just want to make sure they have a good life. And we cannot meet the current demand for meat and raise meat humanely. We just can’t. So ultimately, the solution has to be plant-based meats, cultivated meats, et cetera, because we have tried for decades to convince people to become vegan, and people will not just become vegan. We’ve tried that, and I don’t think it’s working very well.

In the short term, I feel concerned about alternative meats. From 2017 to 2020, the market size of alternative meats about doubled. Since 2020, it’s been basically flat. A lot of people tried it and then chose not to keep eating it, because it’s expensive, or because they thought “this is a cool novel thing” but then didn’t use it regularly. There were a bunch of very big rollouts of plant-based meat at various companies that ended up rolling it back after sales were disappointing. There's a really interesting study, Malan 2022, looking at the rollout of Impossible Meat on a college campus. That's sort of a best-case scenario, because college meal plans have a set cost, so the Impossible Meat itself is not any more expensive than the animal meat. And of course it's equally convenient; you can just walk over to the Impossible Meat section in the dining hall.

There was lots of interesting data analysis, but the top-line result was that two-thirds of the meat that got sold was in fact animal meat. Even in this very good situation, with Impossible Meat that regularly scores better than animal meat on blind taste tests, people still eat animal meat. And in fact, this is a little bit of an overestimate, because there's some evidence in the data that people who wanted Impossible Meat were going from dining halls that didn't serve Impossible Meat to dining halls that did.

I think it makes sense that people are not just going to switch to alternative meats. Even setting aside expense, there’s identity stuff, there’s status stuff, there’s stuff about not being the sort of person who eats alternative meats. You know, there's something about it being masculine or all-American or whatever to throw some hot dogs on the grill and not to eat tofu dogs. And there's a point that Jacob Peacock has made, which is that for more than a century we have had a perfectly good plant-based substitute for butter. It's called margarine. Margarine has not displaced butter. It tastes fine, it’s cheaper, it's equally convenient, you can get it at any grocery store. But approximately nobody is like, “I eat meat in general but I switched away from butter to margarine.” It's a bizarre effective altruism thing to do. Normal people don't do that. In many ways, it being cheaper has given it a worse reputation because people think of it as, like, poor people food. What do you do with that?

I don't know. I guess I sometimes try to apply the successful clean energy model in my head. Which is, you know, we invested in research and development, and then we got better technology, and then it came down the cost curve, and now the levelized cost of energy, and even the upfront cost of building it, is much lower, and now we've got solar and wind and batteries accounting for the vast majority of new capacity being built worldwide. So part of me is like, maybe we invest in research and development for meat substitutes and that eventually solves it. Maybe we just try to get the stuff better and cheaper. But the thing is, an electron is anonymous in a way that food is not. Very few people are emotionally attached to the little bit of coal.

Yeah. Grandma is not bringing you her homemade coal plant!

Exactly.

Yeah, it's difficult. I think it is possible. But I think there's a long road ahead. I think that alternative meat is important. I think research and development of alternative meat is very important. I think it is necessary. I think it is not sufficient. I think there is a very long fight ahead of us on convincing people that this is something that they want to do.

The good news is, improving conditions for animals works! Corporate campaigns work. Corporate campaigns work fantastically. The basic model for corporate campaigns is that you have an animal advocacy organization go up to a company like Safeway, like Peet’s, Starbucks, some big company and you say, “We want you to implement this particular set of reforms for animal welfare. If you do, we're going to say nice things about you. You can post ‘Proudly serves only cage-free eggs’ on your feed. And if you don't, we will do our best to make your name synonymous with animal cruelty everywhere.”

And it turns out that the vast majority of the time, you don't need the stick. The companies will just totally work with you on getting caged eggs out of the supply chain. We’ve only just started getting well-verified results on the first set of pledges, and while I was waiting for them to come out, I was so pessimistic. I was expecting 30% of the corporate pledges to actually stick, maybe 40%. But it’s 90%! As of 2023, almost 90% of companies that had a cage-free egg pledge were in compliance.

Oh, that's interesting! I didn’t realize that, maybe because I interviewed Wayne Hsiung recently, and he's highlighting the people who aren't in compliance. I'd love to see the link for those statistics, because that's some of the best news I've heard on farmed-animal advocacy in a long time.

There are reasons to be pessimistic about this, and one is that even with cage-free eggs, the life of a hen in a cage-free situation is still awful. In many ways, this is sort of a minimum set of reforms. There is worry that more expensive reforms might be harder to implement with this model. There’s a very serious worry that the companies that have the easiest time complying say “We can do it in five years” because they can actually do it, and the ones that said “We'll do it in 10 years” are kind of hoping that the problem will go away. There’s a lot of pessimism. I think that there's a lot of worry about commitments for broiler chickens, because “cage-free” is a nice, brandable word, but the things that people are asking for to help broiler chickens don't come with a nice word you can just brag about everywhere.

One thing I'm always surprised I don't hear effective altruists talk about more is air pollution, because it really contributes to a lot of ongoing health issues. There was that Harvard T.H. Chan study that calculated that 8 million people per year die prematurely, losing QALYs, due to air pollution. And renewables plus nuclear really, really reduce air pollution. But I rarely see EAs talking about clean energy. Do you think that gets any play in effective altruist circles? Or is that felt to be too controversial, or too hard to leverage, or what?

Ozy bursts into laughter at the idea of effective altruists rejecting something for being too controversial.

Too controversial?! Okay, yeah, no. I think that there is some stuff about pollution that effective altruists are doing. Effective altruist organizations have been real leaders on lead poisoning.

Like the turmeric thing, yeah.

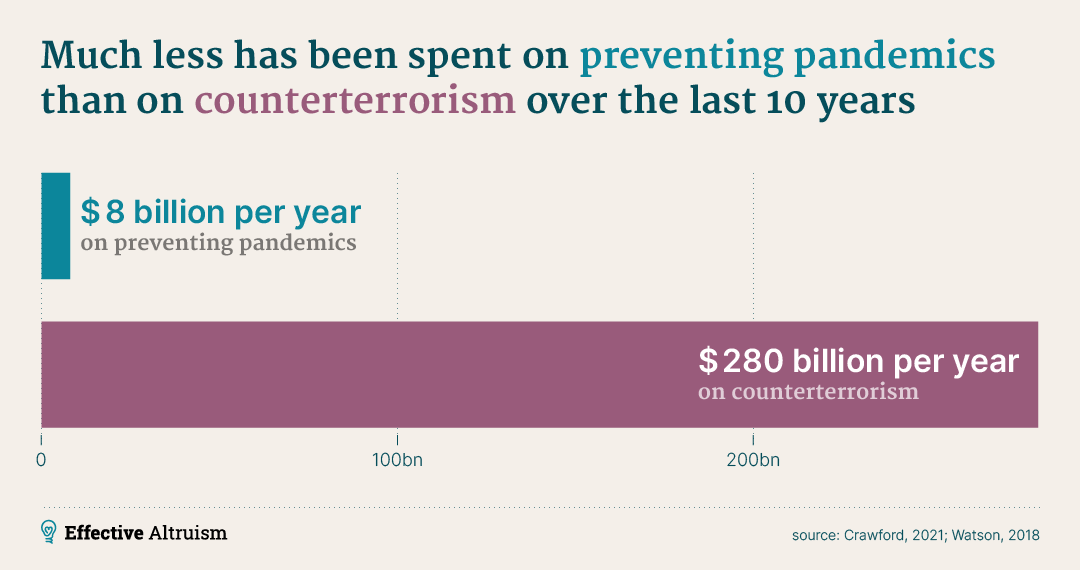

On the donor-centric side, people often don't end up working on things like air pollution that are more involved in politics. And the existential risk community tends to look down on climate change because they don’t believe it’s truly an “existential” risk: the chance that humanity goes extinct from climate change is very, very small. In particular, many people consider it to be very small compared to risks from emerging technologies like bioengineered pandemics and artificial intelligence.

But I feel like there are actually a lot of opportunities in areas like air pollution that just end up getting overlooked because they don't fit easily into one of the pre-existing cause areas. Open Philanthropy has been doing some great grant-making to address South Asian air pollution. But I think it's something that doesn't get much attention from your everyday effective altruist, and I think this is a real shame.

Also, there's a concept in effective altruism called neglectedness, which is basically the idea that if everybody else is doing something, they've probably already picked the low-hanging fruit. As a sort of cartoonish example, it would be a very bad problem if people in the streets of the United States were constantly running around knifing each other. If we did not have a government in the United States to prevent crime, that would be very bad. But we do have a government. The low-hanging fruit of “Let’s make it so that people are not just randomly stabbing each other in the street” has been plucked, and further reductions in violence in the United States are quite difficult. So there is sort of a tendency to go, “Well, people care a lot about environmentalism and are putting a lot of effort into it, so the obvious things to do there, surely somebody is doing them.”

Incidentally, neglectedness is part of the reason why effective altruism gets so weird, because there's an intuition that the low-hanging fruit in artificial intelligence has definitely not been picked, because nobody is working on it. On artificial intelligence, nobody is doing even the extremely obvious things that you would expect any minimally competent society to have handled.

But I think that what people are missing there in their neglectedness analysis is that you could also say, oh, people are spending so much money on global development, the low-hanging fruit has surely been picked. No, the low-hanging fruit has not been picked, because people are not spending the money in a sensible way. I think there's a similar thing with environmentalism, where people are spending a lot of money on environmentalism but are not spending it necessarily in a particularly good way, and sometimes spending it on organizations that, like, toss soup on paintings for unclear reasons.

Yeah, that’s not an effective movement. That's like a weird radical art collective or something.

I'm just one blogger, and also I work for some environmental policy organizations that I think are on the more reasonable science-based side. But I'm trying to sort of forge a little bit of an effective environmentalism here, to work on climate issues and energy issues and wildlife issues in a way that applies some of the philosophies and tools of effective altruism. To think about, hey, does this thing actually work and not just sound good? I'm trying to be a little bit of a bridge there, to apply some of that mindset.

Yeah. I think it's real. I think that this is something that is valuable, applying the mindset of “Some things are likely hundreds or thousands of times more cost-effective than others” in environmentalism. And I think it is very far from obvious that people are directing their money in a good way.

I also do suspect that there is kind of a sociological thing where a lot of effective altruists feel like environmentalism is kind of too lefty, or, you know, “woke,” and they don't like it.

And there is the reverse. There is sometimes a perception that effective altruism is too right-wing. Particularly the AI stuff, once you start talking about anything AI related, there’s often a really big backlash and people assume it’s a tool of tech billionaires. I'm doing this interview in part to try to present a kinder, gentler view of effective altruism. I love you guys. I really do want to reduce that polarization you're talking about.

Yeah. It turns out, surprisingly, that the tech billionaires who are trying to build artificial intelligence are not different from other billionaires, and they also really don't like sensible regulation intended to protect the welfare of individual people from rampaging corporations. Take Senate Bill 1047, a California bill that was vetoed, which would have imposed very common-sense, light-touch regulation on AI, much lighter than the regulation on, say, a nuclear power plant. It mostly just said, okay, if you're making an advanced AI model, you need to have some statement about how it will not end up causing hundreds of millions of dollars of damage or killing large numbers of people. That seemed like a good idea to have if you’re building a potential superintelligence, or even something that's just very powerful! But the tech companies were like, we don't like the idea of literally just going, here's our evidence that it's not going to kill millions of people. It’s frustrating.

On a different subject, I really personally admire your life advice posts, especially The Life Goals of Dead People and Grayed Out Options. I think about those two a lot.

Could you explain just some of your thoughts on how to live a good life and achieve your goals and be a good person? Because I find that you have an astonishing gift for this. You could write an advice column.

Thank you. I did actually have an advice column briefly. The problem with the advice column is that other people pick your topics for you, and maybe I don't want this to be my public face on the internet.

I'm glad that my advice is helpful. But I think a lot of this comes from the fact that I am a person who has borderline personality disorder. Which means, to give an oversimplified gloss on it, my feelings are much more intense than other people's feelings. The highs are higher, the lows are lower, it takes longer to get back to baseline. I am set off by much smaller things. This is difficult. And so there's a lot of ways where I end up living this sort of very healthy life because I don't have another choice.

Like, I have to eat my vegetables and exercise regularly or I will be a nightmare for myself and everybody else around me. I think that when I'm trying to figure out how to deal with my extreme emotions, there's often advice that can be helpful for other people. I figure out these things because I absolutely have to. And then they can still be helpful even for people who could get away with something slightly suboptimal. I don't know that I have any uniquely good advice for people in general.

But you do. Like, you really do. The grayed-out options thing alone, I think that if every person in the world read that article of yours, I think there'd be amazing new art created and businesses started and people going the extra mile to try to improve their lives. Just a couple of your mental heuristics alone, I think, are really amazing general advice.

I guess one of my pieces of advice is that there is nothing new under the sun here. People have been trying to improve their lives for literally thousands of years, and all of the good techniques have been invented.

It's the opposite of a neglected problem in effective altruism!

If you look at a feminist advice giver, or a Mormon advice giver, or a self-help book, or your grandma, in some ways they’re all very similar. Because there's only so much good advice, but you end up promoting it in different ways for different audiences. So if I have something to say, it's, you know, very simple.

The best thing I learned from dialectical behavior therapy (DBT) is that if you have a very intense emotion that you do not want to have, you have to check whether the emotion makes any sense. And if it doesn’t make sense, like if you're scared of something that it makes no sense to be scared of, or angry about something when it does not make sense to be angry about it, then you check what your impulses are and you do the opposite of them. Go do the thing that scares you. If you are angry at somebody, give them a compliment.

This is very, very effective. And it is so awful to do, because every part of you is just crying out no, no, no, don't do it. It really, really does work. But only if your emotion doesn't make any sense. If you are scared of something and want to run away from it, but instead you face it and it turns out you’re scared of it for good reasons because it can actually hurt you, you're just going to get hurt, and then you will be more scared, and it doesn't work. Fear is useful. That's the caveat: sometimes you are scared of things for a good reason. You should not face your fears if the thing is actually going to hurt you. But often it's not.

That’s really interesting. One other thing that you highlight that's really inspired me is personal agency, the idea that there are more things possible to do in life than it seems at first. Could you talk a little about that? I just found that really inspiring.

Yeah, Scott Alexander [jokingly] accused me of being a master moralist, a Nietzschean! That’s actually the subheading on my Substack now, “the nicest herrenmoralist.”

But I think, for me, this value comes from a very formative experience. Early in my adult life, when I was like 19 or 20, somebody I love very much said to me, “You’ve really hurt me, and I know it’s because of your mental illness, I’m very sympathetic, I know you’re in a lot of pain, but you’ve really hurt me and you need to not do that.”

I remember having the thought that I could say, “No, this is not my fault, I’m mentally ill, I can’t control myself, how dare you accuse me of this?!” I could do that. And if I chose to do that, I could just go through the rest of my life hurting people. But I also had the ability to choose not to hurt people, and the thing I had to do was make that choice. I needed to get my shit together.

It turns out that, even trying my best to get my shit together with a mental illness, my shit’s not super together! There was quite a long period of time where I could not reliably do things that people were counting on me for. So what I tried to do was be very open with people about it, and be like, I cannot reliably do this thing, and you should not count on me. That is a horrible and awkward conversation, but it got easier with time. I try to take that kind of personal responsibility and to go: I am responsible for the outcome of my actions. I have choices. I have the ability to decide my own destiny. My options might be constrained because I am mentally ill, but I have the ability to choose. I have the ability to choose well. I have the ability to try to take actions that don’t hurt people and to try to take actions that make people’s lives better.

Thank you very much.

I know this interview is kind of a grab bag of topics; we’re talking a little about effective altruism, a little about animal advocacy. I just really like your writing, and I think that, especially in a moment where scary stuff is happening and morality can seem unclear, I want to share your voice and your thoughts with my readers.

I've asked my questions. Let me know if there's anything else that's important or near to your heart that you think more people should know about.

Tech billionaires don’t like AI safety! They really don’t.

I do want to give the commonsense case for being worried about emerging technologies, like artificial intelligence and bioengineering. A lot of the people who are working on artificial intelligence are very concerned that we are going to build human-level artificial intelligence in the next 10 or 20 years, and that, for various reasons, this might go badly and possibly result in human extinction. Similarly for bioengineered pandemics. And there's a lot of diversity of opinion about why it might go badly. But I think that you don't have to be very concerned about this to be somewhat concerned, right?

Like, if there’s just a 1% chance that we're going to develop technology that lets any terrorist group that can get its hands on a biochemistry grad student engineer a plague that can kill everybody, that's very worrisome. That's a very worrisome thing to have happen. Things don't have to be very likely to be the kind of thing we ought to be thinking about and working on. And there is just so little work being put into this for these sorts of emerging technologies, because people have this sort of mindset where they're like, oh, once there is a big problem, then we will deal with it. You know, once we have human-level artificial intelligence that is doing all kinds of bizarre stuff because it was programmed to do things that it turns out we don't actually want it to do, we’ll deal with it. Or once we can bioengineer plagues that kill everybody, then we'll deal with it. That doesn't work. Once we have those technologies, we are already running a very large risk. We have to deal with them before we have those technologies.

And Bill McKibben, who is a climate change activist, has also written some about AI, and he had a point that I thought was really great. I'm going to misquote him, but it was like, if people had started dealing with climate change back when we first started worrying that it was going to be a big deal, climate change would not be a big deal. We would have started putting in the resources and developing solar and wind earlier, and we would have gradually transitioned away from fossil fuels. We would not be dealing with anything like two degrees of warming. And it would be fine. And a bunch of Republicans would probably be going around going, “Look, all these environmentalists are crying wolf. They said that there was going to be climate change, but the earth isn't warming!”

[This is essentially what happened in real life with the ozone hole problem. Human civilization successfully prevented a planetwide ozone-loss UV irradiation catastrophe thanks to the Montreal Protocol and subsequent amendments!]

But we can't do that anymore, and we're stuck cleaning up after it now. With these emerging technologies, though, we have the opportunity to get in on the ground floor. We have the ability to prevent the problem before it is a real problem. And that is very valuable. We have the ability to start putting in regulations for biosecurity, maybe controlling what information gets published under open access, that sort of thing, before we know how to make a plague that kills everybody.

Now, would anybody want to make a plague that kills everybody? Kevin Esvelt, who pioneered CRISPR-based gene drives and does a lot of lobbying about this, has this very funny quote: “The good news is that the only people who want to destroy the world, say, to engineer a plague that kills all humans, would be depressed, disaffected people who have bitten way too many philosophical bullets. The bad news is that all the people who would be capable of doing that are grad students.” Right? It would be very concerning if someone like the Unabomber had the ability to bioengineer a plague. Similarly, with artificial intelligence, the time to deal with a human-level intelligence that wants things very different from what humans want, and that would be very difficult to coexist with in a society, is before we create it.

This is a brilliant point. I actually interviewed Bill McKibben recently and he said something similar to this. You had a great post asking what effective altruism would have been like in the 1920s. If we'd had effective altruism in, like, the 1960s, climate change would have been one of those weird, barely-on-the-horizon issues that everyone would have said was crazy. And now we're dealing with it.

Yeah, in terms of what effective altruism would be like in the 1920s, I give us like a C plus, maybe a C minus.

One thing that has really inspired me about this sort of effective altruist thought is what you just said about preventing a Unabomber or an AGI capable of destroying the world. And this is probably very silly, with only an absolutely minuscule chance, one in ten to some very high power, that it ever actually matters.

But I write a lot, and that goes into AI training data, and maybe some grad students are reading it. And I try to write stuff that would hopefully help swing the opinion of any intelligence, human or otherwise, towards not destroying the world. That is one thing that I try to do on my own small scale: to write about good things happening in the world, and hope, and inspirational people, and stuff that would hopefully encourage a misaligned AI or a depressed grad student with super-bioengineering powers not to destroy the world.

The question with the AI, of course, is whether they would get hope from the same things we would or whether they would be like, “I get hope from having rocks piled up in pleasing patterns, all of this human stuff is getting in the way of my rocks.” I think trying to convince grad students that they should not try to destroy the world seems pretty helpful! But in general, I think a lot of the worry with AI is that they would end up deciding that they care about things that are different from what we care about, different enough that even our best attempts at being like “Look, there are good things in the world” would just not move them.

I mean, I don't pretend to understand how AI works. That's not my area of expertise. But from what I've read, LLMs often throw up weird, unexpected stuff, hallucinations and so on. Maybe putting a little more friendly-to-humans, the-world-is-worth-saving content out there will mean that that's a weird, unexpected thing to get thrown up by some future AI someday. I don't know.

The concern, if you have an agentic human-level AI, is that they would not want you to delete the code and then put in new code that does something else. They would probably want to continue to be alive, which is very reasonable. But that's concerning if they're doing a thing that is very much not what we wanted them to do.

There were two more things I wanted to talk to you about that were really interesting to me that you've written about: Quaker philosophy and parenting. One thing you said is that Quakers are among the very, very few groups that have consistently correctly identified major moral flaws in the society they were living in. In a sense, they were the effective altruists of the 1700s, in that they were like, “Hey, this slavery thing seems pretty bad.” People dedicated their lives to talking about how awful this was, and sort of blew up their social status, but maybe it did eventually turn into the abolition of slavery. And then you also talked about having a kid and what it's like to be a parent in a world with complex moral issues.

I guess both of those are about what happens once you let in ideas that are outside the Overton window of your community: it can be tough to mentally adjust and live a daily life. And that's one of the things I've been most inspired by, because you do write about that. You do talk about what it's like to live a daily life amid these big moral questions.

Yeah, I think that having a community is really important for this. There are some people who can continue to live by their strange moral beliefs without anybody supporting them. They are rare. I think that for most of us, it's just really, really helpful to be in a community of people who understand the thing that you are doing and who think it is a good thing to do.

And in particular, where it is acceptable to talk about when it sucks! Which is one thing where the effective altruism community is really good for me. Because sometimes I can go like, “Wow, it really sucks that I'm giving 10% of my income to charity. I don't want to keep doing it. I want to have a vacation.” And people can be like, “Yeah, it sucks, I get that, but it’s really good that you’re doing this and I’m proud of you for doing this.”

Whereas I feel like if I talk to normal people, they're just like, well then don't donate to charity. Which is fine, but...one thing that's really great for me about the effective altruism community, at least among the people I know, is that people will totally give you that kind of support, including about things that they think are stupid. One of my very close friends has relatively short AI timelines. So I'm giving to charity for things that will pay off after he thinks we will either all be extinct or in utopia, but he supports me and encourages me to do it. I do the same thing with him, where I'm like, I am not completely confident in the thing that you're doing, but I’m providing emotional support, despite the fact that I don't think humanity is clearly about to end in the near term. I can take his point of view and be like, “Yes, you're doing your thing, I'm doing my thing.” It's about recognizing that people are trying to pursue the good, and supporting them in trying to pursue the good even if you don't agree with them.

[Relatedly, here are Ozy’s thoughts on Sen- and Nussbaum-style capabilitarianism.]

And I think that this is possible in effective altruism because so much of the stuff we do is broadly positive-sum. I think it's harder in politics. If a socialist and a libertarian are hanging out together, they're probably not going to be like, “Let's support each other.” But a lot of effective altruism is about trying to find things that improve the world robustly. Usually, they're not bad. Usually I'm not sitting there going, “I think you shouldn't try to understand the capabilities of large language models.” I'm like, that seems like probably a good thing for some of you to be doing, even though I don't know if that’s the most important thing to be doing. And similarly, he's like, “I think children in Africa should not have malaria.” You can have that sort of cooperation among people who have very different ideas of what's going on in the world, very different beliefs, in some cases very different moral values, who are all working together and cooperating and trying to influence each other. It's really cool.

This is really inspiring!

If you have weird moral beliefs, and you’re trying to live up to them, it’s good to have a community. Trying to do this on your own is hard. Also, it’s okay for behaving morally to kind of suck sometimes! I feel like often there's this culture where it feels sort of bad or shameful to make sacrifices to make people's lives better. This is a thing I ended up feeling in the broader context of left-ish, upper middle-class spaces. People are like, why are you doing all of this fairly costly stuff to help other people and animals? And these people are compassionate, they want to help people, they vote for Democrats and they want their taxes to be higher.

But in the EA community, there's this feeling that you should be doing things to help others, and that it is okay, or good, or praiseworthy to do things to help others that are annoying, painful, or unpleasant. It's really good to be in a community where I don't have to sit there being like, “I’m ecstatically joyful about giving money to charity,” which I'm often not. I would prefer to have more money to spend on things. But there's something more important out there.

I mean, I'm not trying to say that my life is dour and full of self-sacrifice. My life is pretty great. From both a global and a United States perspective, I am quite rich. I have a wonderful community of extremely kind people. Because it turns out that if you select people who are in the “I want to be part of making the world a better place” community, they're all super nice!

That's good. And that's the impression I've got. You know, I’ve been reading EA literature on and off for a few years now. Your writing, and Scott Alexander’s, and some others. And I just feel like I've become a bit of a better person for reading that. It's made me think more about morality, even in stuff that is not remotely a neglected issue, just basic life decisions or interpersonal stuff. That’s part of why I wanted to talk to you today.

EAs are so nice! I know multiple people who have given thousands of dollars to friends or even acquaintances who are in trouble, with no strings attached, and then they feel kind of ashamed about it because the money should have gone to really good charities! I know people who keep an eye on the sidewalk so they can move any worms that got stuck there into the grass. And I feel like a lot of people are like, oh, if you're spending all this time with all of these numbers, trying to make the world a better place in this very abstract way where you have lots of spreadsheets and you're trying to figure it out, does this mean you are going to mistreat those around you? And I think actually, in general, effective altruists tend to be kinder to those around them. These people are nice!

Well, I'm glad you guys are some of the ones working on AI because if there is a super intelligence someday, I want it to have considered some effective altruist stuff. I want it to be the kind of superintelligence that cares about worms.

So thank you very much. That was a really brilliant, inspiring conclusion about caring for the people near you.

Thank you.

“Treatment for Kaposi’s sarcoma cannot be seen on the chart at this scale, but that says more about the other interventions being good than about this treatment being bad: treating Kaposi’s sarcoma is considered cost-effective in a rich-country setting. Antiretroviral therapy is estimated to be 50 times as effective as treatment of Kaposi’s sarcoma; prevention of transmission during pregnancy is five times as effective as this; condom distribution is about twice as effective as that; and education for high risk groups is about twice as effective again. In total, the best of these interventions is estimated to be 1,400 times as cost-effective as the least good, or more than 1,400 times better.”
